X Corp, the company owned by Elon Musk, has filed a lawsuit challenging California's new law, which requires online platforms to remove or label deceptive, campaign-related deepfakes in the periods before and after an election. The company argues that the law violates the constitutional right to free speech and conflicts with the content-platform protections in Section 230 of the federal Communications Decency Act. The lawsuit seeks to block enforcement of the law, known as the Defending Democracy from Deepfake Deception Act.

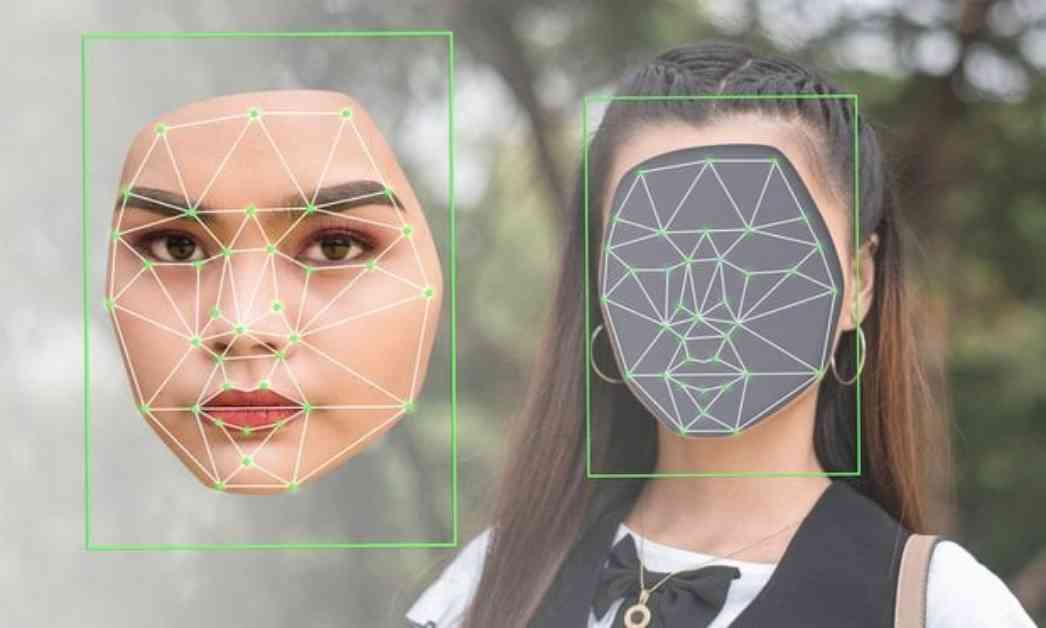

Deepfakes are synthetic media, typically produced with machine-learning techniques, in which a person's face, voice, or actions are altered or fabricated. A common form maps one person's face onto another person's facial movements, producing a video that looks realistic but is fake. The technology has raised concerns about misuse during election campaigns and other political events, and California's new law tries to address those concerns by making online platforms responsible for controlling the spread of deepfake content.

X Corp, however, argues that the law goes too far by restricting free speech and imposing new obligations on content platforms. Section 230 of the Communications Decency Act generally shields online platforms from legal liability for content posted by their users. The company contends that forcing platforms to remove or label deepfakes would undercut these protections and lead to the censorship of legitimate content.

The legal battle between X Corp and the state of California highlights the complex issues surrounding deepfake technology and online content moderation. While there is growing recognition that the spread of misinformation online must be addressed, there are also concerns about the impact on free speech and about how much responsibility online platforms should bear.

As technology continues to advance, lawmakers and tech companies will need to work together to find a balance between protecting users from harmful content and upholding fundamental rights such as free speech. The outcome of this lawsuit could have significant implications for the regulation of deepfake technology and the responsibilities of online platforms in controlling the spread of misleading information.

In the meantime, the debate over deepfakes and online content moderation is likely to continue as technology evolves and new challenges emerge. It is essential for policymakers, tech companies, and other stakeholders to engage in constructive dialogue to address these issues and ensure a safe and transparent online environment for all users.